SNIA recently hosted a multi-vendor discussion on leveraging the cloud for data protection. If you missed the Webcast, “Moving Data Protection to the Cloud: Trends, Challenges and Strategies,” it’s now available on-demand. As promised during the live event, we’ve compiled answers to some of the most frequently asked questions on this timely topic. Answers from SNIA as well as our vendor panelists are included. If you have additional questions, please comment on this blog and we’ll get back to you as soon as possible.

Q. What is the significance of NIST FIPS 140-2 Certification?

Acronis: Certain entities require FIPS 140-2 certification before they will use a cloud-based solution. It is important to understand the customer you are going after and whether this will be a requirement. Many small businesses do not require FIPS, but some do.

Asigra: Organizations that are looking to move to a cloud-based data protection solution should strongly consider solutions that have been validated by the National Institute of Standards and Technology (NIST), an agency of the U.S. Department of Commerce, as this certification represents that the solution has been tested and maintains the most current security requirement for cryptographic modules, or encryption. It is important to validate that the data is encrypted at rest and in flight for security and compliance purposes. NIST issues numbered certificates to solution providers as the validation that their solution was tested and approved.

SolidFire: FIPS 140-2 defines four levels of security, 1 through 4; the level needed depends on what the application requires. FIPS stands for Federal Information Processing Standard and is required by some non-military federal agencies for hardware/software to be allowed in their datacenter. The standard describes the requirements for how sensitive but unclassified information is stored, and it focuses on how the cryptographic modules secure information for these systems.

Q. How do you ensure you have real time data protection as well as protection from human error? If the data is replicated, but the state of the data is incorrect (corrupt / deleted)… then the DR plan has not succeeded.

SNIA: The best way to guard against human error or corruption is with regular point-in-time snapshots; some snapshots can be retained for a limited length of time while others are kept for as long as the data needs to be retained. Snapshots can be done in the cloud as well as in local storage.

Acronis: Each business needs to think through its retention plan to mitigate such cases. For example, a business might keep 7 daily backups, 4 weekly backups, 12 monthly backups and one yearly backup. In addition, it is good to have a system that allows one to test the backup with a simulated recovery to verify that data has not been corrupted.
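To make the 7 daily / 4 weekly / 12 monthly / 1 yearly example concrete, here is a minimal Python sketch of how such a schedule could decide which backups to keep. It is an illustration only, not any panelist's product logic: the function name `select_backups_to_keep` and the rule "keep the newest backup in each week, month and year" are assumptions made for this example.

```python
from datetime import date, timedelta


def select_backups_to_keep(backup_dates, daily=7, weekly=4, monthly=12, yearly=1):
    """Return the set of backup dates to retain under a tiered schedule.

    Hypothetical helper: keeps the newest `daily` backups, plus the newest
    backup from each of the most recent `weekly` ISO weeks, `monthly`
    calendar months and `yearly` calendar years.
    """
    newest_first = sorted(set(backup_dates), reverse=True)
    keep = set(newest_first[:daily])

    def newest_per_period(period_key, limit):
        # Walk from newest to oldest and take the first (newest) backup
        # seen in each distinct period until `limit` periods are covered.
        seen, chosen = set(), []
        for d in newest_first:
            key = period_key(d)
            if key not in seen:
                seen.add(key)
                chosen.append(d)
            if len(chosen) == limit:
                break
        return chosen

    keep.update(newest_per_period(lambda d: tuple(d.isocalendar())[:2], weekly))
    keep.update(newest_per_period(lambda d: (d.year, d.month), monthly))
    keep.update(newest_per_period(lambda d: d.year, yearly))
    return keep


# Example: one backup per day for 400 days, ending on an arbitrary date.
last_day = date(2024, 12, 31)
all_backups = [last_day - timedelta(days=i) for i in range(400)]
kept = select_backups_to_keep(all_backups)
expired = sorted(set(all_backups) - kept)  # candidates for deletion
```

Because one backup can satisfy several tiers at once (the newest backup is also the newest of its week, month and year), the number of retained copies is at most 7 + 4 + 12 + 1 = 24 and usually fewer.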

Asigra: Organizations that are migrating to SaaS-based applications like Google Apps, Microsoft Office 365 and Salesforce.com should consider a cloud-based data protection solution that backs up the data created and stored in these applications to a third-party cloud, so that the organization’s unique data protection requirements are met. You need to take responsibility for protecting data born in the cloud, much as you protect data created in traditional on-premises applications and databases. The responsibility for data protection does not move to the SaaS application provider; it remains with you.

For example, user error is one of the top ways that data is lost in the cloud. With Microsoft Office 365, by default, deleted emails and mailboxes are unrecoverable after 30 days; if you cancel your subscription, Microsoft deletes all your data after 90 days; and Microsoft’s maximum liability is $5000 US or what a customer paid during the last 12 months on subscription fees, assuming you can prove it was Microsoft’s fault. All the more reason to have a data protection strategy in place for data born in the cloud.

SolidFire: You need a real-time asynchronous replication technology that achieves a low RPO and does not rely on snapshots. Application-consistent snapshots must be used concurrently with real-time replication to achieve both real-time and point-in-time protection. Consider the scenario where you perform a successful failover but then find that the data is corrupted. With application-consistent snapshots at the DR site, you would be able to roll back instantly to a point in time when the data and app were in a known good state.
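The rollback step described above comes down to choosing the newest application-consistent snapshot taken before the corruption occurred. The Python sketch below illustrates that selection only; the `Snapshot` class and `pick_rollback_point` function are hypothetical names for this example and do not correspond to any vendor’s API.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass(frozen=True)
class Snapshot:
    """Hypothetical record describing one snapshot held at the DR site."""
    taken_at: datetime
    app_consistent: bool


def pick_rollback_point(snapshots: Iterable[Snapshot],
                        corrupted_at: datetime) -> Optional[Snapshot]:
    """Return the newest application-consistent snapshot taken strictly
    before the corruption time, or None if no such snapshot exists."""
    candidates = [
        s for s in snapshots
        if s.app_consistent and s.taken_at < corrupted_at
    ]
    return max(candidates, key=lambda s: s.taken_at, default=None)


# Example with made-up timestamps.
snaps = [
    Snapshot(datetime(2024, 1, 1, 8, 0), app_consistent=True),
    Snapshot(datetime(2024, 1, 1, 12, 0), app_consistent=False),
    Snapshot(datetime(2024, 1, 1, 16, 0), app_consistent=True),
    Snapshot(datetime(2024, 1, 1, 20, 0), app_consistent=True),
]
rollback = pick_rollback_point(snaps, corrupted_at=datetime(2024, 1, 1, 18, 30))
```

Replication alone would have copied the corrupted state to the DR site; the snapshot history is what makes an earlier, known good state available to roll back to.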

Q. What’s the easiest and most effective way for companies to take advantage of cloud data protection solutions today? Where should we start?

SNIA: The easiest way to ease into using cloud storage is to either (1) use the direct cloud interface of your backup software if it has one to set up an offsite backup, or (2) use a cloud storage gateway that allows public or private cloud storage to appear as another local NAS resource.

Acronis: The easiest way is to use a solution that supports both cloud and on-premises data protection. Companies can then start by backing up certain workloads to the cloud and add more over time. Today, we see that many workloads are protected with both a cloud copy and an on-premises copy.

Asigra: Organizations should start with non-production, non-critical workloads to test the cloud-based data protection solution to ensure that it meets their needs before moving to critical workloads. Identifying and understanding their corporate requirements for a public, private and/or hybrid cloud architecture is important as well as identifying the workloads that will be moved to the cloud and the timing of this transition. Also, organizations may want to consult with a third party IT Solutions Provider who has the expertise and experience with cloud-based data protection solutions to explore how others are leveraging cloud-based solutions, as well as conduct a data classification exercise to understand which young data needs to be readily available versus older data that needs to be retained for longer periods of time for compliance purposes. It is important that organizations identify their required Recovery Time Objectives and Recovery Point Objectives when setting up their new solution to ensure that in the event of a disaster they are able to meet these requirements. Tip: Retain the services of a trusted IT Solution Provider and run a proof of concept or test drive the solution before moving to full production.

SolidFire: Find a simple and automated solution that fits into your budget. Work with your local value-added reseller of data protection services. The best thing to do is NOT wait. Even something like Carbonite is better than nothing. Don’t get caught off guard. No one plans for a disaster.

Q. Is it sensible to move to a pay-as-you-go service for data that may be retained for 7, 10, 30, or even 100 years?

SNIA: Long-term retention does demand low-cost storage, of course, and although the major public cloud storage vendors offer low pay-as-you-go costs, those costs can add up to significant amounts over a long period of time, especially if there is any regular need to access the data. An organization can keep control over the costs of long-term storage by setting up an in-house object storage system (“private cloud”) using “white box” hardware and appropriate software such as what is offered by Cloudian, Scality, or Caringo. Another way to control the costs of long-term storage is via the use of tape. Note that any of these methods (public cloud, private cloud, or tape) requires an IT organization or its service provider to regularly monitor the state of the storage and periodically refresh it; there is always potential over time for hardware to fail or for the storage media to deteriorate, resulting in what is called bit rot.

Acronis: The cost of storage is dropping dramatically and will continue to do so. The best strategy is to go with a pay-as-you-go model with the ability to adjust pricing (downward) at least once a year. Buying your own storage will lock you into pricing for too long a period.

SolidFire: The risk of moving to a pay-as-you-go service for that long is that you lock yourself in for as long as you need to keep the data. Make sure that contractually you can migrate or move the data away from the provider, even if it’s for a fee. The sensible part is that you can contract that portion of your IT needs out and focus on your business and advancing it, rather than worrying about completing backups on your own.
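To see how pay-as-you-go charges accumulate over a long retention period, and how an annual price adjustment changes the total, the arithmetic can be sketched in a few lines of Python. Every number below is a placeholder chosen for illustration, not a real vendor price, and the function `cumulative_storage_cost` is a hypothetical helper; the sketch also ignores access and egress fees, which the SNIA answer notes can matter.

```python
def cumulative_storage_cost(capacity_tb, price_per_tb_month, years,
                            annual_price_change=0.0):
    """Total cost of holding `capacity_tb` for `years` years.

    `annual_price_change` is the fractional change applied to the unit
    price at the end of each year (for example, -0.10 for a 10% yearly
    decrease). All inputs are assumptions supplied by the caller.
    """
    total = 0.0
    price = price_per_tb_month
    for _ in range(years):
        total += capacity_tb * price * 12   # twelve monthly charges
        price *= 1 + annual_price_change    # renegotiated price for next year
    return total


# Placeholder figures only: 100 TB at a made-up $10 per TB per month.
flat_30_years = cumulative_storage_cost(100, 10.0, 30)
declining_30_years = cumulative_storage_cost(100, 10.0, 30,
                                             annual_price_change=-0.10)
print(f"flat pricing:      ${flat_30_years:,.0f}")
print(f"10% yearly decline: ${declining_30_years:,.0f}")
```

Running the same function with an organization’s own capacity, pricing and retention period gives a rough baseline to compare against the cost of an in-house object store or tape.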

Q. Is it possible to set up a backup so that one copy is with one cloud provider and another with a second cloud provider (replicated between them, not just doing the backup twice) in case one cloud provider goes out of business?

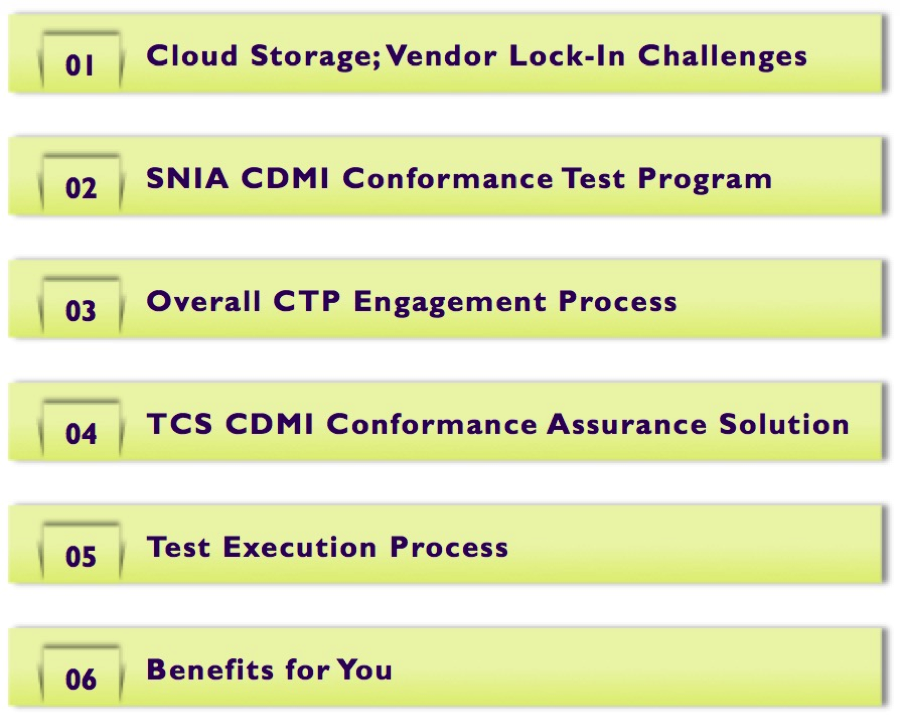

SNIA: Standards like SNIA’s CDMI (Cloud Data Management Interface) make replication between different cloud vendors fairly straightforward, since CDMI provides a data- and metadata-neutral way of transferring data, along with both standard and extensible metadata to control policy.

Acronis: Yes, this is possible, but it is not a good strategy for mitigating the risk of a provider going out of business. If that is a concern, then pick a provider you trust and one where you control where the data is stored. Then you can easily switch providers if needed.

SolidFire: Yes, setting up a DR site and a tertiary site is very doable. Many data protection software companies do this for you through integrations with the cloud providers. When looking at data protection technology, make sure its policy engine is capable of being aware of multiple targets and moving data seamlessly between them. If you’re worried about cloud service providers going out of business, make sure you bet on the big ones with proven success and revenue flow.